The Eye of the Beholder

by Michael Agresta

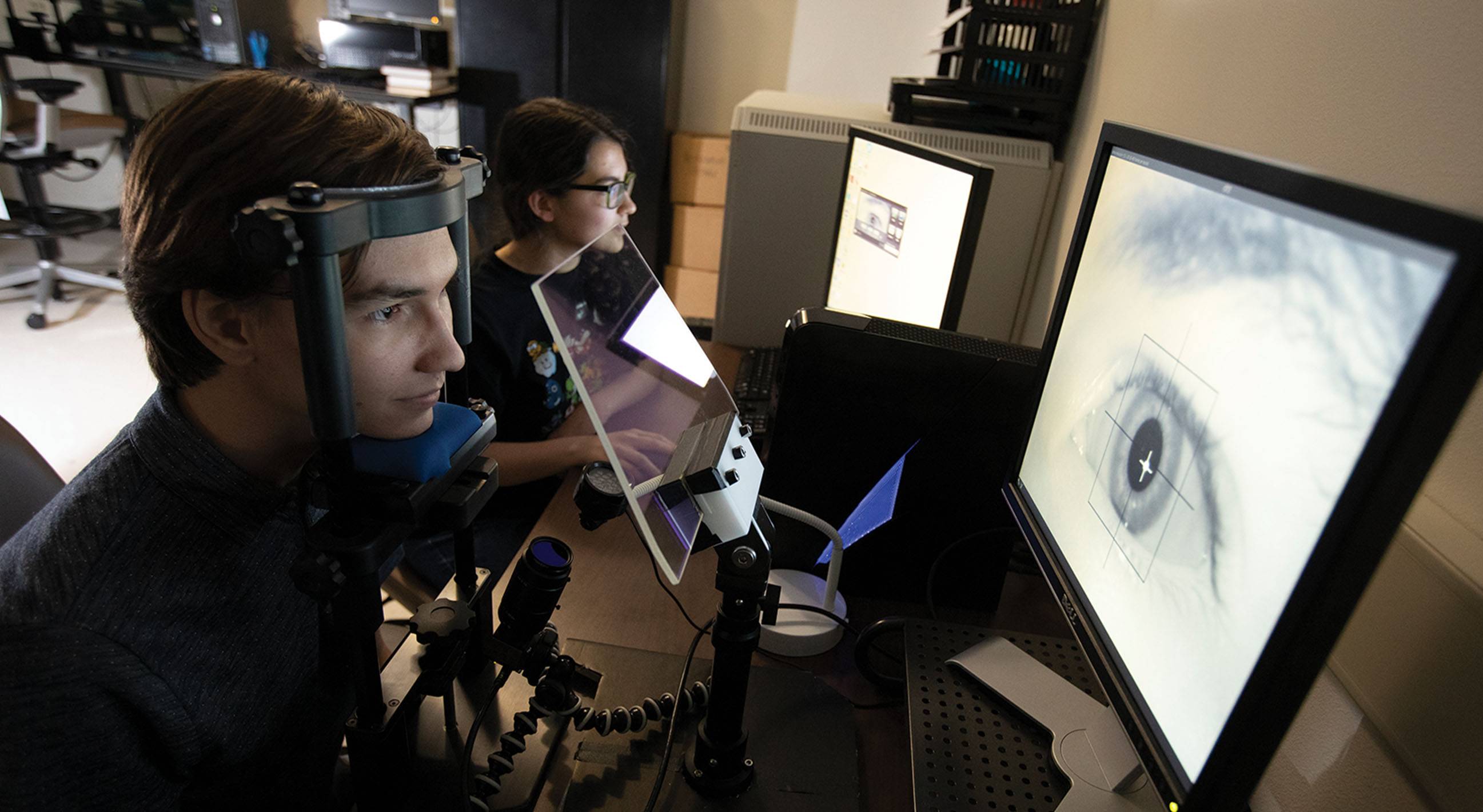

Oleg Komogortsev sees a future where his eye-sensor technology is essential to daily life

From today’s perspective, the idea of computer systems that track our tiniest eye movements may seem like a far-off futurist’s dream. Yet it wasn’t so long ago that only Silicon Valley entrepreneurs and technologists could imagine us walking around with tiny supercomputers in our pockets, navigating the world using the internet.

If Dr. Oleg Komogortsev, associate professor of computer science, is correct, the next big shift in mobile computing and gaming might be widespread adoption of eye-sensor technology — and his research is building that future. “Right now, I’m working to make it possible so we will have, let’s say, at some point in the future, billions of these sensors,” Komogortsev says. “I think it will be possible as a part of virtual and augmented reality platforms.”

Komogortsev has been collecting high-level awards for his research into the human eye and eye-sensor technology, including two Google Virtual Reality Research Awards in support of his work at Texas State and a 2014 Presidential Early Career Award for Scientists and Engineers. This latter award is “the highest honor bestowed by the United States government on science and engineering professionals in the early stages of their independent research careers,” according to the White House. It was given to Komogortsev in recognition of his research in cybersecurity with an emphasis on eye movement-driven biometrics and health assessment.

Komogortsev envisions multiple applications for eye sensors in near-future consumer technology. The first reason they may be widely adopted is security. Because the movements of the human eye are far more complex, individuated, and difficult to artificially replicate than the human fingerprint, Komogortsev predicts that eye sensors will soon replace fingerprint sensors as the biometric security technology of choice.

Ninety percent of the brain is involved in the process of vision. That makes building a fake version of a user’s eye impossible for hackers — because mimicking an individual’s unique patterns of eye movements would be nearly as complex as mimicking an entire brain. “For somebody to be able to spoof the system, they would have to accurately replicate the internal structure of the brain and the eye, which is impossible with the current technological state of our civilization,” Komogortsev says.

A second application for eye-sensor technology, and perhaps the “killer app” that will lead to its mass adoption in consumer technology, is its importance in improving graphics for virtual reality (VR) and augmented reality (AR) systems. A large, expensive, bandwidth-hogging system may not need eye sensors, but for systems that are light, mobile, cheap, and better at conserving power and working through limited internet connectivity, eye sensors can be a big help. That’s because of a process called “foveated rendering,” an emergent graphics technology that ensures wherever the user’s eye is looking inside a VR headset or AR wearable device, the graphics quality is maximized at that exact point. While display areas near the user’s focal point are rendered in high quality and crystal-clear, display areas in the user’s peripheral vision are allowed to be blurrier, like a lower-resolution photograph.

This process mimics how the human eye works. “For the user, there is no change in the perceptual quality because, the way the human eye operates, you only see quite well at the point you’re looking at, and the periphery is blurred,” Komogortsev says.
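For readers curious how that trade-off might look in practice, here is a minimal sketch in Python. It is an illustration only, not Komogortsev's method or any headset's actual renderer: the function name `render_quality`, the radii, and the minimum quality value are all assumptions chosen for the example. The function returns full quality near the point where the user is looking and tapers off linearly toward the periphery.

```python
import math


def render_quality(pixel, gaze, sharp_radius=100.0, falloff_radius=400.0,
                   min_quality=0.25):
    """Return a quality factor between min_quality and 1.0 for one pixel.

    All parameters are hypothetical values picked for illustration:
    - pixel, gaze: (x, y) screen coordinates in pixels
    - sharp_radius: distance from the gaze point rendered at full quality
    - falloff_radius: distance beyond which quality stays at min_quality
    """
    distance = math.hypot(pixel[0] - gaze[0], pixel[1] - gaze[1])
    if distance <= sharp_radius:
        return 1.0  # at or near the focal point: full quality
    if distance >= falloff_radius:
        return min_quality  # far periphery: lowest quality
    # In between, blend linearly from full quality down to min_quality.
    t = (distance - sharp_radius) / (falloff_radius - sharp_radius)
    return 1.0 - t * (1.0 - min_quality)


if __name__ == "__main__":
    gaze = (960, 540)  # assumed gaze point reported by an eye sensor
    for pixel in [(960, 540), (1210, 540), (0, 0)]:
        print(pixel, render_quality(pixel, gaze))
```

A renderer could use a factor like this to decide how much detail to spend on each region of the display, which is where the savings in battery power and bandwidth would come from.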

VR and AR devices will require eye sensors to be able to know which way the user is looking and render graphics accordingly. By skimping on graphic resolution in peripheral-vision areas of the display, the device can save on battery power and bandwidth, making mobile VR and AR possible. “That’s why Google was interested,” Komogortsev says. “In their Cardboard division, you have the form factor of an inexpensive VR platform that is powered by the mobile phone.”

The third and final application for Komogortsev’s eye-sensor research in consumer technology is health tracking. Many diseases and ailments, from concussions to Parkinson’s, are known to have symptoms involving changes in eye movement patterns. If we were walking around with devices that tracked our eye movements, those sensors could perform the bonus service of keeping an “eye” out for such changes.

“Let’s say you have a VR platform that you’re using on an everyday basis, and let’s say a person starts developing some neurological condition — for example, multiple sclerosis,” Komogortsev says. “It will be possible to alert the user to go and see a doctor. Then, the medical professional can make a much more accurate decision about what is happening to the user.” He reasons that this intervention could lead to earlier detection, earlier treatment, and better outcomes for those who suffer from neurological ailments.

Komogortsev acknowledges that medical privacy is an important concern in this automated diagnosis scenario. That’s not his knot to untangle, however. As a university-based researcher, he’s happy to keep his focus on the research, not the questions of how to bring it to market. “We do have hardware prototypes in the lab that we are developing, but those prototypes are for the purpose of just showing to the industry that this is possible,” he says. “My hope right now is that companies such as Google or Facebook, or others, would see value in this type of work and develop products around it.” ✪

UPDATE: 02/06/2020

This spring, Oleg Komogortsev, associate professor of computer science, and his team will present their project during the 2020 SXSW Innovation Lab event.